Comparing training window selection methods for prediction in non-stationary time series

13 Jan, 2026

Fridtjof Petersen

Jonas Haslbeck

Jorge N. Tendeiro

Anna M. Langener

Martien J.H. Kas

Dimitris Rizopoulos

Laura Bringmann

Abstract

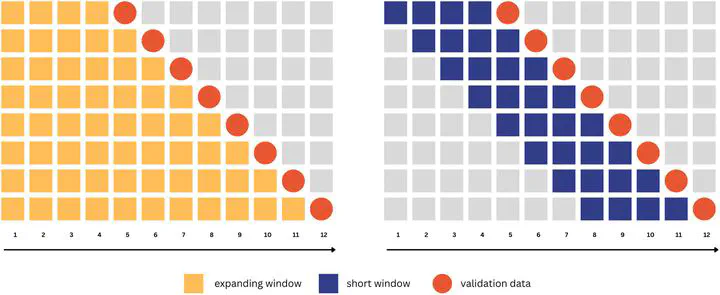

The widespread adoption of smartphones creates the possibility of passively monitoring everyday behaviour via sensors. Sensor data have been linked to individuals' moment-to-moment psychological symptoms and mood, and could thus alleviate the burden associated with repeated measurement of symptoms. Additionally, psychological care could be improved by predicting moments of high psychopathology and providing immediate interventions. Current research assumes that the relationship between sensor data and psychological symptoms is constant over time or changes at a fixed rate: models are trained on all past data or on a fixed window, without comparing different window sizes with each other. This is problematic because choosing the wrong training window can negatively impact prediction accuracy, especially if the underlying rate of change varies. As a potential solution, we compare different methods for choosing the training window size, ranging from common heuristic-based practice to super learning approaches. In a simulation study, we vary the rate of change in the underlying relationship over time. We show, for both simulated and real-world data, that even computing a simple average across different windows can help reduce the prediction error compared with selecting a single best window.
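To make the idea of averaging across training windows concrete, here is a minimal sketch in Python. It is not the authors' implementation: the simulated data, the drifting slope, the window sizes, the linear model, and the helper name `rolling_window_predictions` are all assumptions chosen for illustration. For each time point, a linear regression is fitted on the most recent `w` observations for several values of `w`, and the one-step-ahead predictions are then averaged with equal weights.

```python
import numpy as np

def rolling_window_predictions(x, y, windows, start):
    """Predict y[t] from x[t] for t >= start, one column per window size.

    Hypothetical helper: for each t, an intercept-plus-slope regression is
    fitted by least squares on the w observations immediately before t.
    """
    n = len(y)
    preds = np.full((n - start, len(windows)), np.nan)
    for i, t in enumerate(range(start, n)):
        for j, w in enumerate(windows):
            lo = max(0, t - w)  # first index of the training window
            X = np.column_stack([np.ones(t - lo), x[lo:t]])
            beta, *_ = np.linalg.lstsq(X, y[lo:t], rcond=None)
            preds[i, j] = beta[0] + beta[1] * x[t]
    return preds

# Simulated data (assumption): the slope linking x to y drifts over time.
rng = np.random.default_rng(0)
n = 300
x = rng.normal(size=n)
slope = np.sin(np.linspace(0, 3 * np.pi, n))
y = slope * x + rng.normal(scale=0.5, size=n)

windows = [10, 30, 100]  # candidate training window sizes (assumption)
start = max(windows)     # first time point with a full longest window
preds = rolling_window_predictions(x, y, windows, start)

# Simple equal-weight average of the predictions from all windows.
avg_pred = preds.mean(axis=1)

truth = y[start:]
rmse_per_window = np.sqrt(((preds - truth[:, None]) ** 2).mean(axis=0))
rmse_average = np.sqrt(((avg_pred - truth) ** 2).mean())

print("RMSE per window:", dict(zip(windows, rmse_per_window.round(3))))
print("RMSE of averaged prediction:", round(float(rmse_average), 3))
```

The equal-weight average never does worse than the worst single window in terms of RMSE, but whether it beats the best single window depends on the data; the sketch only shows how such a comparison could be set up.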

Publication

British Journal of Mathematical and Statistical Psychology